The Hidden Cost of Letting AI Do the Talking

I feel them… don’t you? The expectations… to respond instantly, to make that message perfect, to stay on top of my mountain of emails and other messages. And so I’m grateful, I really am, for predictive text and AI tools that write for me, edit my hurried attempts, and take the edge off messages that came out a little sharp. The rapid adoption of these tools around the world is creating nothing less than a revolution in the history of human communication.

But just as cars made us lazier about walking and texting has made us lazier about calling, I also wonder how this shift is going to worsen our dependency on machines and, consequently, our social isolation (for more on that, see my article, “Hej! Howdy! and Other Small Steps in the Right Direction”).

The 2013 movie Her opens with a scene of Joaquin Phoenix’s character writing love letters and greeting cards for his employer’s customers because he can write better ones than those who know their loved ones intimately (or do they?). Twelve years later, large language models (LLMs) can write these love letters for us for free, or even better ones for a fee. They can also write emails to our boss, our colleagues, the leads we’d like to convert to clients, or the people we’d like to convert to our way of seeing the world.

In Her, Joaquin’s use of technology comes at a price—which I won’t spoil for you in case you still need to see it. In real life, what will be the cost we pay for outsourcing our communication to AI? How will reliance on LLMs reshape our ability to connect authentically, communicate effectively, and understand one another?

AI’s Impressively Imperfect Mimicry

LLMs have a remarkable capacity for generating coherent, correctly spelled and punctuated responses, undoubtedly improving the clarity of written communication. They can bridge language barriers through real-time translation and tailor messages to specific audiences and contexts. Trained on immense datasets of human text, LLMs are learning to emulate human communication patterns with increasing sophistication.

Perhaps most impressively, research indicates that LLMs can exhibit elements of cognitive empathy, the ability to recognize emotions in written communication and provide contextually appropriate, supportive responses. Studies evaluating models like ChatGPT-3.5 and GPT-4 have found they can perform well on empathy-related tasks, with their responses sometimes even preferred over human ones in specific scenarios.

There is an important difference, however, between cognitive empathy (understanding feelings) and affective empathy (genuinely experiencing or sharing those feelings). LLMs, lacking the ability to have genuine emotional experiences, excel at the former but are incapable of the latter. Their sympathetic responses are sophisticated pattern matching, not felt understanding. LLMs do not “understand” text in the human sense of that word; their responses are based on statistical patterns in data, not true comprehension.

The Costs of Outsourcing Communication

Note: what follows is not a call to abandon the use of LLMs or other AI tools. That horse has left the barn. It is a note of caution about the unintended, negative consequences of this revolution that we are only just beginning to see.

Even if we grant that LLMs can mimic empathic human communication perfectly, or soon will, depending on AI to write for me has a dark side: the loss of authenticity and of my unique voice. If you’ve used LLMs at all, you’ve likely noticed that text generated or influenced by them often feels robotic, impersonal, and generic. AI tools are prone to clichés, formulaic language, and hackneyed phrases (“In today’s rapidly changing world…”, “Harnessing the power of…”) that signal machine generation rather than original thought. Unique elements like personal anecdotes, contextual understanding, timing, vulnerability, and emotional nuance, the qualities that build connection and convey sincerity, are lost on AI (at least for now).

The result is an authenticity deficit. Communication that feels overly polished, automated, or otherwise inauthentic erodes trust and the writer’s reputation. People connect with perceived effort, personality, and sincerity—attributes that AI cannot (yet) replicate convincingly. When communication feels robotic, it creates distance, making the sender seem disconnected, predictable, and even tone-deaf.

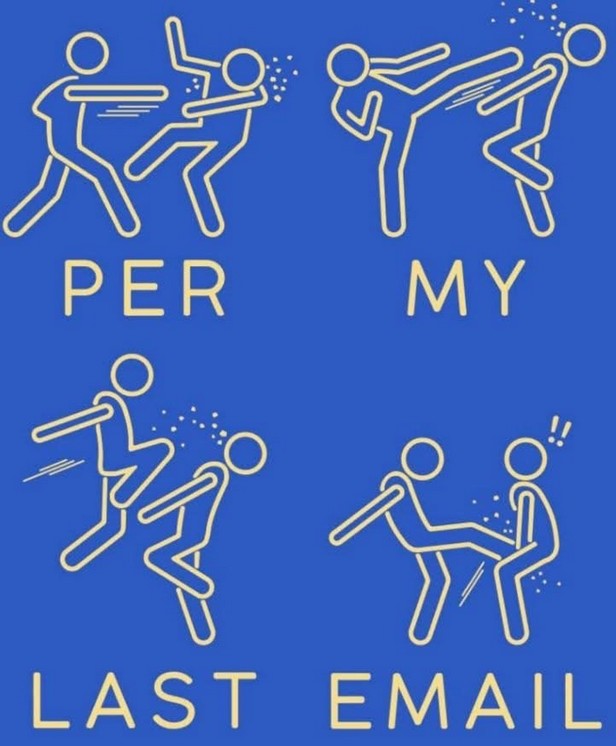

Research reveals an intriguing authenticity paradox. While using AI-generated “smart replies” can increase communication speed and emotional positivity, the knowledge or suspicion that AI is involved triggers markedly negative perceptions in the reader. People evaluate communication partners they suspect of using AI as less cooperative, less affiliative (less interested in connection), and more dominant, even when the AI objectively improved certain metrics of communication (e.g., positivity). In other words, the upside of AI-assisted writing may be outweighed by distrust and other downsides. The perceived lack of genuine thought or intention behind AI-generated messages can negate the efficiency gains. The process and perceived source of the communication appear to matter as much as, if not more than, the polished output itself.

More importantly, human communication does much more than convey information. Showing kindness, giving constructive feedback, empathizing, reading social nuances, listening actively, and expressing oneself honestly are not innate talents but abilities developed over time through interaction, effort, and practice. These skills are vital for building strong relationships, fostering understanding, and maintaining personal well-being, and we must be able to exercise them in person and aloud, not merely in writing.

Reliance on LLMs (as opposed to mere use of them) carries the risk of dependency and downskilling. The concern is that AI dependency in our digital communication can spill over into our face-to-face interactions, limiting our capacity for unassisted, real-world conversations. Consistently outsourcing communication to AI deprives us of practice, in the same way that my son’s delegating his subtraction problems to Alexa deprives him of the opportunity to learn. Outsourcing becomes a crutch that diminishes our capacity to communicate independently and our adaptability when faced with novel situations.

Several skills seem particularly vulnerable to downskilling:

- Clarity and Expression: Frequently relying on AI to generate text weakens our ability to articulate thoughts clearly, structure arguments coherently, and find our authentic voice without assistance. Students have even confessed to noticing a decline in their essay-writing abilities due to a reliance on LLMs.

- Emotional Nuance and Empathy: Crafting genuinely empathetic messages and interpreting subtle emotional cues requires practice. Outsourcing these tasks to AI, which lacks genuine emotional depth, reduces our engagement with these complexities of human communication.

- Critical Thinking and Problem Solving: Using LLMs as decision-making aids carries the risk of becoming overly dependent on AI recommendations without fully understanding the rationale. This can impair our ability to evaluate information critically and solve problems without the aid of AI.

Gaining an Edge Without Losing Ourselves

The future that AI-assisted communication offers humanity is both promising and concerning. The path forward may lead to a brighter future if the steps are taken mindfully. This will require a conscious effort to engage critically with AI-generated content while prioritizing genuine human connection. Leveraging AI’s abilities in data analysis, research, and the automation of repetitive tasks can free up time for creativity and interpersonal investments.

That said, it’s important that we preserve and celebrate the strengths that are uniquely human in communication, including genuine empathy born from shared experience, spontaneous creativity, and the capacity to read between the lines. And, heeding the advice of the World Health Organization, we must make a conscious effort to prioritize opportunities for direct, unmediated social connection through face-to-face interactions, virtual meetings, and old-fashioned phone calls.

All this will require a kind of digital mindfulness. We must become more aware of when and why we’re drawn to seek AI’s assistance. Developing self-awareness regarding potential dependence or the subtle signs of skill erosion is essential for navigating this new landscape responsibly.

AI is a powerful tool—one that also shapes its user. We need not fear technology so long as we can wield it intelligently. Combining AI’s efficiency with our creativity and critical judgment, we can upskill our social interactions while safeguarding what makes communication authentically human.
