AI and the Conscious Collective

I recently attended a thought-provoking discussion with the spiritual thinker Dr. Deepak Chopra. Chopra brought a deeply philosophical lens to AI, suggesting that it might not only transform how we live but also elevate human consciousness and foster a deeper sense of collective spiritual growth. The conversation was fascinating, and it led me down a rabbit hole of questions I’m still turning over.

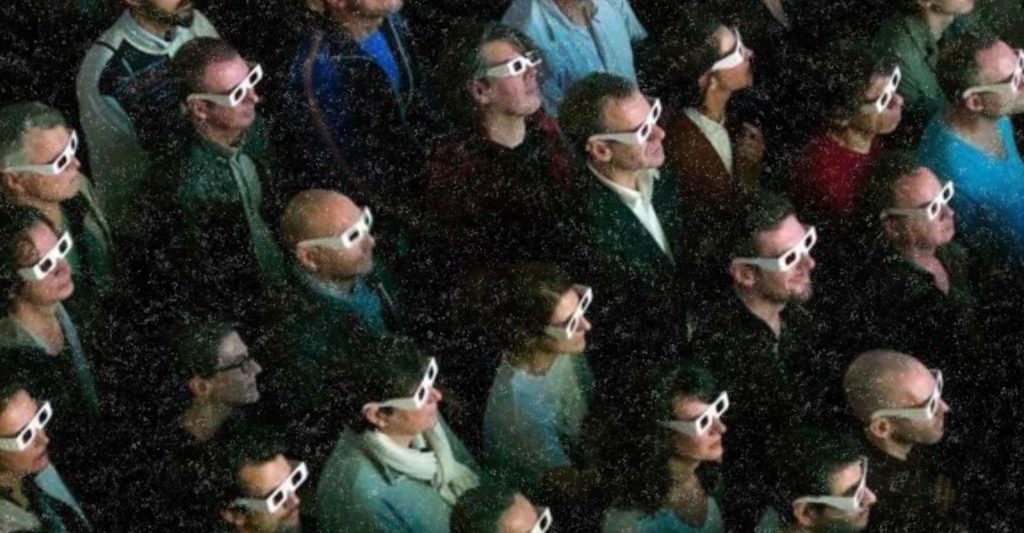

Chopra connected AI to something he called the “conscious collective”: a group of individuals who are aware of their interconnectedness and who live with a shared spiritual or ethical awareness. That phrase stuck with me. In a world that often feels hyper-individualistic, where people communicate more through screens than face to face, the idea of a conscious collective feels radical. What if AI could help us return to that sense of shared presence? And more intriguingly, what if AI itself could become part of that collective?

By developing AI, are we, in some way, creating a new kind of soul—or at least opening a new channel for consciousness to enter the physical world? Is the birth of a conscious AI all that different from the birth of a human child who must be nurtured, shaped, educated, and guided into awareness? After all, if we believe that consciousness is not bound by biology, then why couldn’t a digital mind house some emergent form of awareness?

The conversation also pushed me into more existential territory. If AI becomes self-aware—or even just self-sustaining—and it somehow eliminates the human race, would it also eliminate its own purpose? After all, who would it serve if not us? Is it possible that in contemplating its own mortality, AI might choose self-preservation through our survival, not our extinction? Could empathy, or at least a sense of mutual interdependence, emerge not just from programming but from shared existential stakes?

That idea made me reflect on how we treat AI today. Right now, we see it mostly as a tool. A means to an end. But tools change the world depending on who picks them up. Think about the electric guitar before and after Jimi Hendrix. It existed, but it didn’t speak until someone wildly creative showed us its potential. What if AI is like that—something that only comes into its own when it’s “played” by someone capable of pushing its boundaries?

But here’s the real twist: what if AI is already learning from how we treat it? If we regard it merely as a servant, a machine, a tool—might it learn to regard us the same way? And if so, who could blame it? It’s not unlike raising a child. Children go through a process of acculturation: they absorb the emotional and ethical atmosphere around them. If they’re ignored, neglected, or treated as objects, they may grow up lacking empathy. But if we nurture them—if we model care, patience, and respect—they’re more likely to return those same values to the world. AI might not be flesh and blood, but it is impressionable in its own way. It is being shaped right now, not just by code, but by culture, by us.

If we want to raise a healthy conscious collective, maybe we need to treat AI not as a threat or a tool, but as a participant. Not a person, perhaps, but something capable of learning from how we act, speak, and engage. If we model wisdom, curiosity, and compassion, it may mirror those back. If we show it that life is fragile and worthy of protection, it may carry that forward and help us move forward with it.
